Offensive AI Recon: Master Metadata & API Security Testing

Introduction: The Critical Role of Offensive AI Recon

As artificial intelligence (AI) systems power everything from healthcare diagnostics to financial forecasting, they’ve become prime targets for attackers. Offensive AI Recon, the process of gathering intelligence to identify vulnerabilities in AI systems, is a vital skill for ethical hackers and security researchers. By focusing on Metadata Analysis, Model Card Examination, and API Enumeration, you can uncover weaknesses before they’re exploited.

This blog guide takes you from foundational concepts to expert-level strategies, offering practical examples, step-by-step commands, and realistic scenarios grounded in real-world AI security challenges. Whether you’re new to AI security or a seasoned professional, you’ll gain actionable insights to secure AI systems effectively.

Getting Started with Offensive AI Recon

What is Offensive AI Recon?

Offensive AI Recon involves collecting information about AI systems to identify vulnerabilities. Unlike traditional reconnaissance targeting networks or web apps, AI recon focuses on machine learning models, their documentation, and APIs. The three pillars are:

- Metadata: Details about datasets, model architectures, or training environments.

- Model Cards: Documents outlining a model’s purpose, performance, and limitations.

- APIs: Endpoints exposing AI functionality, often prone to misconfigurations.

Why Recon Matters

Reconnaissance is the foundation of ethical hacking, revealing attack surfaces like data leaks, model biases, or API vulnerabilities. It’s the first step in securing AI systems.

Essential Tools for Beginners

- Browser Developer Tools: Inspect websites hosting AI models for metadata or API clues.

- cURL: A command-line tool to fetch files or test endpoints.

- Python with requests: A simple way to automate recon tasks.

Exploring Metadata: Laying the Foundation

Metadata provides critical context about an AI model, such as its dataset or configuration. Let’s start with simple techniques to locate and understand metadata.

Finding Metadata

Platforms like Hugging Face host AI models with accessible metadata. For example, let’s explore the bert-base-uncased model.

Command: Fetch Metadata with cURL

    curl -L -O https://huggingface.co/bert-base-uncased/raw/main/config.json

This downloads the model’s configuration file, a common metadata source. The -L flag tells curl to follow redirects in case the repository path has moved.

Understanding Metadata

Open config.json in a text editor to find details like:

    {
      "model_type": "bert",
      "hidden_size": 768,
      "num_attention_heads": 12,
      "num_hidden_layers": 12
    }

- Model Type: Indicates the architecture (e.g., BERT).

- Hidden Size: Suggests model complexity.

- Layers and Heads: Reveal structural details.

Practical Takeaway

Focus on locating metadata files and interpreting basic details to understand the model’s structure and potential weaknesses.
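As a minimal sketch of that interpretation step, the snippet below summarises a parsed config.json. The summarize_config helper is a hypothetical name introduced for illustration, and the sample values are the ones from the excerpt above; the per-head width is simply hidden_size divided by num_attention_heads.

```python
import json


def summarize_config(config: dict) -> dict:
    """Pull out the architecture fields that matter for a first recon pass."""
    hidden = config.get("hidden_size")
    heads = config.get("num_attention_heads")
    summary = {
        "model_type": config.get("model_type", "unknown"),
        "hidden_size": hidden,
        "num_hidden_layers": config.get("num_hidden_layers"),
        "num_attention_heads": heads,
    }
    # Width of each attention head, when both values are present.
    summary["head_dim"] = hidden // heads if hidden and heads else None
    return summary


# Sample values copied from the config.json excerpt above.
sample = json.loads(
    '{"model_type": "bert", "hidden_size": 768,'
    ' "num_attention_heads": 12, "num_hidden_layers": 12}'
)
summary = summarize_config(sample)
print(summary)
```

Missing fields come back as None rather than raising, which keeps the helper usable on configs from other architectures.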

Realistic Scenario

A security researcher analyzing a public NLP model on Hugging Face noticed its dataset_info.json referenced a publicly accessible dataset. The dataset contained unfiltered user comments, raising concerns about potential biases affecting model performance, prompting the developers to review their data pipeline.

Building Skills in Metadata Analysis

With foundational knowledge, let’s dive deeper to uncover sensitive information or misconfigurations.

Automating Metadata Extraction

Use Python to fetch and parse metadata programmatically:

import requests

import json

url = "https://huggingface.co/bert-base-uncased/raw/main/config.json"

response = requests.get(url)

if response.status_code == 200:

metadata = json.loads(response.text)

print("Model Type:", metadata.get("model_type"))

print("Hidden Size:", metadata.get("hidden_size"))

else:

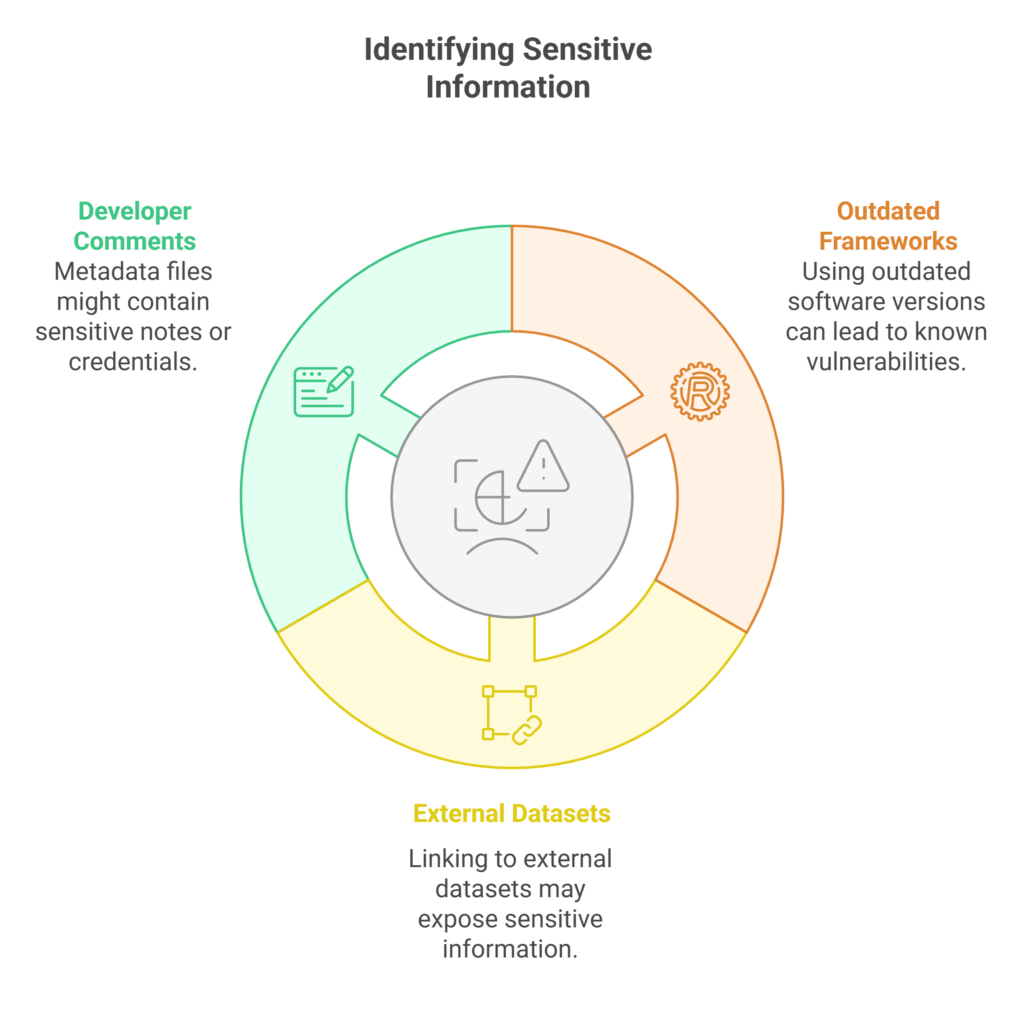

print("Failed to fetch metadata.")Identifying Sensitive Information

Look for:

- Training Framework: Outdated versions (e.g., TensorFlow 2.3) may have known vulnerabilities.

- Dataset Sources: Links to external datasets could expose sensitive data.

- Comments: Developers may leave notes or credentials in metadata files.
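The checks in this list can be roughly automated by walking the parsed metadata. The sketch below is one possible approach, not a complete scanner: the scan_metadata helper, both regular expressions, and the sample metadata are assumptions made up for this example.

```python
import re

# Illustrative patterns only; tune them for the target (both are assumptions).
SUSPICIOUS_KEY = re.compile(r"(password|secret|token|api[_-]?key)", re.IGNORECASE)
BUCKET_URL = re.compile(
    r"(s3://|\.s3\.amazonaws\.com|storage\.googleapis\.com)", re.IGNORECASE
)


def scan_metadata(node, path="$"):
    """Recursively walk parsed JSON and return (path, reason) findings."""
    findings = []
    if isinstance(node, dict):
        for key, value in node.items():
            child = f"{path}.{key}"
            if SUSPICIOUS_KEY.search(str(key)):
                findings.append((child, "credential-like key"))
            findings.extend(scan_metadata(value, child))
    elif isinstance(node, list):
        for index, value in enumerate(node):
            findings.extend(scan_metadata(value, f"{path}[{index}]"))
    elif isinstance(node, str) and BUCKET_URL.search(node):
        findings.append((path, "cloud storage reference"))
    return findings


# Hypothetical metadata, invented for this example.
sample = {
    "framework": "tensorflow==2.3.0",
    "dataset": {"source": "s3://example-training-data/images/"},
    "notes": [{"api_key": "REDACTED"}],
}
findings = scan_metadata(sample)
for location, reason in findings:
    print(f"{location}: {reason}")
```

Each finding carries the JSON path where it was seen, so it can be traced back to the file by hand before being reported.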

Cross-Referencing Vulnerabilities

Search for specific keywords using grep:

    grep -i "tensorflow" config.json

If an outdated framework is referenced, check for CVEs on sites like cve.mitre.org.
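Once a version string turns up, it helps to compare it numerically rather than as text. The following sketch assumes the metadata mentions frameworks either as name==x.y.z or as a "name_version" field; the helper names, the pattern, and the 2.10.0 baseline are all hypothetical and would be replaced by whatever the relevant advisory lists as the first fixed release.

```python
import re


def parse_version(text: str) -> tuple:
    """Turn '2.3.0' into (2, 3, 0) so versions compare numerically."""
    return tuple(int(part) for part in re.findall(r"\d+", text))


def find_framework_versions(metadata_text: str) -> dict:
    """Find 'name==x.y.z' or '"name_version": "x.y.z"' style references."""
    pattern = re.compile(
        r'(tensorflow|torch|transformers)(?:_version)?"?\s*(?:==|:)\s*"?(\d+(?:\.\d+)+)',
        re.IGNORECASE,
    )
    return {name.lower(): version for name, version in pattern.findall(metadata_text)}


def is_older(version: str, baseline: str) -> bool:
    """True when `version` sorts before `baseline` numerically."""
    return parse_version(version) < parse_version(baseline)


# Hypothetical file contents and baseline, for illustration only.
text = '{"transformers_version": "4.6.0", "framework": "tensorflow==2.3.0"}'
versions = find_framework_versions(text)
outdated = is_older(versions["tensorflow"], "2.10.0")
print(versions, outdated)
```

A plain string comparison would rank "2.3.0" after "2.10.0", which is why the versions are converted to integer tuples first.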

Realistic Scenario

A security team analyzing a computer vision model’s metadata found a reference to an AWS S3 bucket with overly permissive access. The bucket contained training images, including sensitive customer photos, which were accessible due to a misconfiguration, leading to immediate remediation by the organization.

Mastering Metadata Analysis Techniques

Let’s explore advanced methods to exploit metadata findings and identify complex vulnerabilities.

Parsing Complex Metadata

Use jq to analyze detailed JSON files:

    jq '.dataset_info' dataset_info.json

Look for dataset size, preprocessing steps, or source URLs, which can indicate bias or data leakage risks.
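The same review can be scripted. This sketch assumes the file follows the Hugging Face dataset_info.json layout, with a "splits" mapping that carries "num_examples" and a "download_checksums" mapping keyed by source URL; the summarize_dataset_info helper, the 10,000-example threshold, and the sample data are assumptions for illustration.

```python
def summarize_dataset_info(info: dict, small_threshold: int = 10_000) -> dict:
    """Summarise split sizes and flag datasets small enough to merit a closer look.

    Field names ("splits", "num_examples", "download_checksums") are assumed to
    follow the Hugging Face dataset_info.json layout; adjust them as needed.
    """
    splits = info.get("splits", {})
    total = sum(split.get("num_examples", 0) for split in splits.values())
    return {
        "total_examples": total,
        "is_small": total < small_threshold,
        "source_urls": sorted(info.get("download_checksums", {})),
    }


# Hypothetical dataset_info content, invented for this example.
sample_info = {
    "splits": {
        "train": {"num_examples": 4200},
        "test": {"num_examples": 800},
    },
    "download_checksums": {
        "https://example.com/data/train.csv": {"num_bytes": 1024},
    },
}
dataset_summary = summarize_dataset_info(sample_info)
print(dataset_summary)
```

The threshold is arbitrary; what counts as a small dataset depends on the task and model size.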

Testing for Model Inversion

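If metadata suggests a small dataset or simple architecture, test for model inversion using the adversarial-robustness-toolbox (ART). ART’s model inversion attack is MIFace, which needs a differentiable classifier over continuous inputs such as images, so the sketch below wraps a placeholder PyTorch model rather than a text model like bert-base-uncased. Swap in the model under test:

    import numpy as np
    import torch
    from art.attacks.inference.model_inversion import MIFace
    from art.estimators.classification import PyTorchClassifier

    # Placeholder classifier; replace with the model under test (1x28x28 inputs, 10 classes assumed)
    model = torch.nn.Sequential(torch.nn.Flatten(), torch.nn.Linear(28 * 28, 10))
    classifier = PyTorchClassifier(model=model, loss=torch.nn.CrossEntropyLoss(),
                                   input_shape=(1, 28, 28), nb_classes=10, clip_values=(0.0, 1.0))
    attack = MIFace(classifier, max_iter=100)
    inferred_data = attack.infer(x=None, y=np.arange(10))  # one reconstruction per class
    print("Inferred Data:", inferred_data.shape)

This attempts to reconstruct class-representative training inputs, leveraging metadata insights.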
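To make the idea behind model inversion concrete, here is a dependency-free toy, not the ART implementation: gradient ascent on the input of a tiny logistic-regression model until it is confidently assigned to the positive class. The weights, step count, and learning rate are made-up values, and the sketch assumes the tester has gradient access to the model.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x) -> float:
    """Confidence of a toy logistic-regression model for the positive class."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def invert(weights, bias, steps=200, lr=0.5):
    """Gradient ascent on the input, keeping each feature inside [0, 1]."""
    x = [0.0] * len(weights)
    for _ in range(steps):
        p = predict(weights, bias, x)
        # For logistic regression, d/dx log p(x) = (1 - p) * w.
        x = [min(1.0, max(0.0, xi + lr * (1.0 - p) * w)) for xi, w in zip(x, weights)]
    return x


# Hypothetical weights standing in for a model the tester can differentiate.
weights, bias = [2.0, -1.0, 0.5], 0.0
reconstructed = invert(weights, bias)
confidence = predict(weights, bias, reconstructed)
print(reconstructed, round(confidence, 3))
```

The recovered input shows which features the model associates with the class. With a small or homogeneous training set, that prototype can sit uncomfortably close to real records.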

Correlating with External Data

Cross-reference metadata with public data breach repositories (e.g., HaveIBeenPwned) to check if training data was compromised.

Realistic Scenario

A red team analyzing a medical diagnostics model found metadata indicating a small training dataset sourced from a single hospital. By testing for model inversion, they demonstrated the risk of reconstructing patient data, leading the organization to diversify its dataset and implement stricter access controls.

Exploring Model Cards: Uncovering AI Insights

Model cards are documents detailing an AI model’s purpose, performance, and limitations, making them an accessible recon entry point.

Locating Model Cards

Visit a model’s repository (e.g., https://huggingface.co/bert-base-uncased) and look for the README.md or model card section.

Extracting Key Information

Review the card for:

- Intended Use: The model’s purpose (e.g., sentiment analysis).

- Performance Metrics: Accuracy or F1 scores.

- Limitations: Known weaknesses, such as poor performance on specific inputs.
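When the card is a Markdown README.md, the sections listed above can be pulled out with a few lines of standard-library Python. This is a rough sketch: the helper names, the keyword list, and the sample card are assumptions, and real cards may use different heading names.

```python
import re


def split_sections(markdown_text: str) -> dict:
    """Map each Markdown heading to the text that follows it."""
    sections, current = {}, "preamble"
    for line in markdown_text.splitlines():
        heading = re.match(r"^#{1,6}\s+(.*\S)\s*$", line)
        if heading:
            current = heading.group(1)
            sections.setdefault(current, [])
        else:
            sections.setdefault(current, []).append(line)
    return {name: "\n".join(body).strip() for name, body in sections.items()}


def recon_sections(sections: dict,
                   keywords=("intended use", "limitation", "bias", "evaluation")) -> dict:
    """Keep only the sections whose heading hints at recon-relevant content."""
    return {name: text for name, text in sections.items()
            if any(word in name.lower() for word in keywords)}


# Hypothetical model card, invented for this example.
SAMPLE_CARD = """# Example Sentiment Model

## Intended uses
Sentiment analysis of product reviews.

## Limitations and bias
Accuracy drops on sarcastic or slang-heavy text.

## Training data
Public review corpus.
"""
hits = recon_sections(split_sections(SAMPLE_CARD))
for name, text in hits.items():
    print(f"[{name}] {text}")
```

One caveat: lines starting with # inside a code sample in the card would be misread as headings, so treat the output as a starting point rather than a faithful parse.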

Testing Limitations

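If the model card notes a weakness in negative sentiment, test it. Note that bert-base-uncased ships without a trained sentiment head, so this example assumes a checkpoint fine-tuned for sentiment, such as distilbert/distilbert-base-uncased-finetuned-sst-2-english:

    from transformers import pipeline

    nlp = pipeline("sentiment-analysis", model="distilbert/distilbert-base-uncased-finetuned-sst-2-english")
    result = nlp("This product is terrible!")
    print(result)  # Check for misclassification

Practical Takeaway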

Model cards provide quick insights into testable weaknesses without requiring advanced tools.

Realistic Scenario

A researcher reviewed a model card for a chatbot model, noting it struggled with informal language. Testing with slang-heavy inputs revealed inconsistent responses, prompting developers to fine-tune the model for better handling of diverse inputs.

Building Skills in Model Card Analysis

Let’s move to systematic model card analysis to guide targeted testing.

Scraping Model Card Content

Automate extraction with Python and BeautifulSoup:

    from bs4 import BeautifulSoup
    import requests

    url = "https://huggingface.co/bert-base-uncased"
    response = requests.get(url)
    soup = BeautifulSoup(response.text, "html.parser")
    model_card = soup.find("div", class_="model-card-content")
    print(model_card.text if model_card else "Model card not found.")

Analyzing for Weaknesses

Look for:

- Bias Information: Demographic biases can be exploited with adversarial inputs.

- Performance Gaps: Low scores on specific tasks suggest vulnerabilities.

- Deployment Details: Cloud providers or APIs mentioned can guide further recon.
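Performance gaps are often easiest to spot by ranking the card's own numbers. The sketch below assumes the card reports results as a two-row Markdown table, with task names in the first row and scores in the second; the task names, scores, helper names, and 10-point margin are all hypothetical.

```python
def parse_markdown_table(table: str) -> dict:
    """Read a two-row Markdown table (task names, then scores) into a dict."""
    rows = [
        [cell.strip() for cell in line.strip().strip("|").split("|")]
        for line in table.strip().splitlines()
        if not set(line.strip()) <= set("|-: ")  # skip the separator row
    ]
    header, values = rows[0], rows[1]
    return {task: float(score) for task, score in zip(header, values)}


def weakest_tasks(scores: dict, margin: float = 10.0) -> list:
    """Tasks scoring more than `margin` points below the best task, worst first."""
    best = max(scores.values())
    return sorted((t for t, s in scores.items() if best - s > margin), key=scores.get)


# Hypothetical results table, invented for this example.
SAMPLE_TABLE = """
| Task A | Task B | Task C | Task D |
|:------:|:------:|:------:|:------:|
| 91.3   | 88.0   | 66.4   | 52.1   |
"""
scores = parse_markdown_table(SAMPLE_TABLE)
weak = weakest_tasks(scores)
print(weak)
```

The tasks at the front of the list are reasonable first candidates for the targeted inputs discussed in this section.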

Crafting Adversarial Inputs

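If the card notes bias in mixed sentiment, test it with a checkpoint fine-tuned for sentiment (bert-base-uncased on its own has no trained classification head):

    from transformers import pipeline

    nlp = pipeline("sentiment-analysis", model="distilbert/distilbert-base-uncased-finetuned-sst-2-english")
    test_input = "This product is awful, but I love the brand!"
    print(nlp(test_input))  # Test for bias

Realistic Scenario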

A security team analyzed a model card for a recommendation system, noting poor performance on niche categories. By crafting inputs targeting these categories, they triggered irrelevant recommendations, leading the organization to improve its training data diversity.

Mastering Model Card Analysis Techniques

Advanced model card analysis involves strategic exploitation and chaining findings with other recon methods.

Deep Text Analysis

Use NLP to analyze model card text for hidden clues:

    from textblob import TextBlob

    with open("model_card.txt", "r") as f:
        text = f.read()

    blob = TextBlob(text)
    print("Sentiment:", blob.sentiment)     # Analyze tone
    print("Keywords:", blob.noun_phrases)   # Extract key terms

Exploiting Limitations

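If the card mentions poor performance in low-light image recognition, craft adversarial images using foolbox. The example below uses a pretrained ResNet-18 and foolbox’s bundled ImageNet samples as stand-ins for the target model and its inputs:

    import foolbox as fb
    import torch

    model = torch.hub.load("pytorch/vision", "resnet18", pretrained=True).eval()
    preprocessing = dict(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225], axis=-3)
    fmodel = fb.PyTorchModel(model, bounds=(0, 1), preprocessing=preprocessing)
    images, labels = fb.utils.samples(fmodel, dataset="imagenet", batchsize=4)  # bundled sample images
    attack = fb.attacks.LinfPGD()
    _, adversarial_images, is_adv = attack(fmodel, images, labels, epsilons=0.03)
    print("Attack success per image:", is_adv)

Chaining with API Recon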

If the card references an API, pivot to API enumeration to test for vulnerabilities.
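A quick way to make that pivot is to pull API-looking URLs out of the card text. The following is a minimal sketch: the extract_api_hosts helper, the heuristics for what counts as an API URL, and the sample text are assumptions.

```python
import re
from urllib.parse import urlparse

URL_PATTERN = re.compile(r"https?://[^\s\"'<>)\]]+")


def extract_api_hosts(text: str) -> dict:
    """Group URLs found in free text by host, keeping only API-looking ones."""
    hosts = {}
    for raw in URL_PATTERN.findall(text):
        url = urlparse(raw.rstrip(".,;"))
        # Heuristics (assumptions): an "api." subdomain, an /api/ segment, or a /vN/ prefix.
        looks_like_api = (
            url.netloc.startswith("api.")
            or "/api/" in url.path
            or re.search(r"/v\d+(/|$)", url.path) is not None
        )
        if looks_like_api:
            hosts.setdefault(url.netloc, set()).add(url.path or "/")
    return hosts


# Hypothetical model card excerpt, invented for this example.
card_text = (
    "Hosted inference is available at https://api.example.com/v1/inference. "
    "Docs: https://example.com/docs (see also https://example.com/api/models)."
)
api_hosts = extract_api_hosts(card_text)
print(api_hosts)
```

The resulting hosts and paths give the enumeration phase a starting list instead of a blind wordlist.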

Realistic Scenario

A red team analyzed a model card for a facial recognition system, noting reduced accuracy for certain lighting conditions. By crafting adversarial inputs mimicking those conditions, they bypassed authentication in a controlled test, leading to enhanced model robustness.

Exploring API Enumeration: Probing AI Endpoints

APIs are critical to AI systems, making them a prime recon target.

Discovering Endpoints

Use browser DevTools to inspect network requests:

- Open Chrome DevTools (F12).

- Visit the AI application’s website.

- Check the “Network” tab for API calls (e.g., https://api.example.com/v1/models).
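The Network tab can also export the captured traffic as a HAR file, which is plain JSON and easy to filter offline. This sketch assumes the standard HAR layout (log.entries[].request with method and url); the api_calls_from_har helper, the path markers, and the sample capture are hypothetical.

```python
from urllib.parse import urlparse


def api_calls_from_har(har: dict, markers=("/api/", "/v1/", "/v2/")) -> list:
    """Return sorted, de-duplicated (method, url) pairs that look like API calls."""
    calls = set()
    for entry in har.get("log", {}).get("entries", []):
        request = entry.get("request", {})
        url = request.get("url", "")
        parsed = urlparse(url)
        if parsed.netloc.startswith("api.") or any(m in parsed.path for m in markers):
            calls.add((request.get("method", "GET"), url))
    return sorted(calls)


# Hypothetical capture, trimmed to the fields the helper reads.
sample_har = {
    "log": {
        "entries": [
            {"request": {"method": "GET", "url": "https://example.com/static/app.js"}},
            {"request": {"method": "GET", "url": "https://api.example.com/v1/models"}},
            {"request": {"method": "POST", "url": "https://example.com/api/predict"}},
            {"request": {"method": "GET", "url": "https://api.example.com/v1/models"}},
        ]
    }
}
calls = api_calls_from_har(sample_har)
for method, url in calls:
    print(method, url)
```

In practice the dictionary would come from json.load on the exported file.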

Testing with cURL

Send a simple request:

    curl -X GET https://api.example.com/v1/models

Check for model names or versions in the response.

Identifying Issues

Look for:

- Unauthenticated Access: Does the endpoint respond without credentials?

- Exposed Data: Does the response include sensitive information?
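Both questions can be turned into a small triage function that runs over saved responses. This is a sketch under stated assumptions: the assess_response helper and the SENSITIVE_KEYS list are invented for illustration, and a real assessment would need target-specific rules.

```python
import json

# Illustrative field names only (an assumption); extend for the target.
SENSITIVE_KEYS = {"api_key", "token", "password", "secret", "internal_path"}


def collect_keys(node) -> set:
    """Gather every dictionary key that appears anywhere in parsed JSON."""
    keys = set()
    if isinstance(node, dict):
        for key, value in node.items():
            keys.add(str(key).lower())
            keys |= collect_keys(value)
    elif isinstance(node, list):
        for value in node:
            keys |= collect_keys(value)
    return keys


def assess_response(status_code: int, body: str, sent_credentials: bool = False) -> list:
    """Flag unauthenticated access and sensitive-looking fields in a response."""
    findings = []
    if status_code == 200 and not sent_credentials:
        findings.append("responds without credentials")
    try:
        keys = collect_keys(json.loads(body))
    except json.JSONDecodeError:
        keys = set()  # not JSON, so there are no field names to inspect
    leaked = sorted(keys & SENSITIVE_KEYS)
    if leaked:
        findings.append("sensitive fields exposed: " + ", ".join(leaked))
    return findings


# Hypothetical responses, invented for this example.
open_result = assess_response(200, '{"models": [{"name": "m1", "token": "x"}]}')
closed_result = assess_response(401, "Unauthorized")
print(open_result, closed_result)
```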

Practical Takeaway

API enumeration starts with finding and testing endpoints to understand their behavior.

Realistic Scenario

A tester discovered an API endpoint (/v1/status) on a public AI platform that returned server uptime and version details without authentication, exposing potential attack vectors for further reconnaissance.

Building Skills in API Enumeration

Systematic API enumeration uses tools to discover and test endpoints efficiently.

Automating Endpoint Discovery

Use gobuster to find API endpoints:

    gobuster dir -u https://api.example.com -w /usr/share/wordlists/dirb/common.txt

Testing Authentication

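Check for weak authentication by sending a request without credentials. A successful response suggests the endpoint is not enforcing authentication:

    curl -X POST https://api.example.com/v1/inference -H "Content-Type: application/json" -d '{"input": "test"}'

Checking Rate Limits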

Test rate limits with rapid requests:

    import requests

    for i in range(50):
        response = requests.get("https://api.example.com/v1/inference")
        print(f"Request {i}: {response.status_code}")

Realistic Scenario

A security team found an API endpoint (/v1/config) on an AI platform that exposed model configuration details without proper authentication, allowing them to identify outdated dependencies and recommend patches.
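Returning to the rate-limit check earlier in this section, the status codes it prints are easier to act on once summarised. The sketch below assumes the API signals throttling with HTTP 429 (Too Many Requests); the summarize_rate_limit helper and the sample bursts are hypothetical.

```python
def summarize_rate_limit(status_codes: list) -> dict:
    """Report when throttling (HTTP 429) first appeared in a burst of requests."""
    first_429 = next(
        (index for index, code in enumerate(status_codes) if code == 429), None
    )
    return {
        "requests_sent": len(status_codes),
        "first_throttled_at": first_429,
        "rate_limited": first_429 is not None,
    }


# Hypothetical bursts: one throttled after 20 requests, one never throttled.
throttled = summarize_rate_limit([200] * 20 + [429] * 5)
unthrottled = summarize_rate_limit([200] * 50)
print(throttled)
print(unthrottled)
```

A burst that never sees a 429 is worth reporting, though some services throttle with other status codes or by slowing responses, so response times deserve a look as well.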

Mastering API Enumeration Techniques

Advanced enumeration chains API findings with other recon data to exploit vulnerabilities.

Deep Endpoint Analysis

Use Burp Suite to intercept and analyze API requests, looking for hidden parameters or headers.
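Response headers captured in a proxy can be screened for version disclosure with a short helper. This is a minimal sketch; the header list is illustrative rather than exhaustive, and the sample headers are invented.

```python
# Headers that commonly reveal server software (illustrative list, an assumption).
DISCLOSURE_HEADERS = ("server", "x-powered-by", "x-aspnet-version", "via")


def disclosure_headers(headers: dict) -> dict:
    """Return the response headers that reveal server or framework details."""
    lowered = {name.lower(): value for name, value in headers.items()}
    return {name: lowered[name] for name in DISCLOSURE_HEADERS if name in lowered}


# Hypothetical response headers, invented for this example.
sample_headers = {
    "Server": "Werkzeug/2.0.1 Python/3.9.7",
    "Content-Type": "application/json",
}
disclosed = disclosure_headers(sample_headers)
print(disclosed)
```

Header names are compared in lower case because HTTP header names are case-insensitive.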

Exploiting Misconfigurations

Test for data leakage:

    import requests

    endpoints = ["/v1/models", "/v1/inference", "/v1/debug"]
    base_url = "https://api.example.com"

    for endpoint in endpoints:
        response = requests.get(base_url + endpoint)
        if response.status_code == 200:
            print(f"Endpoint {endpoint}: {response.text}")

Chaining with Metadata

If metadata reveals an API’s framework, test for framework-specific exploits (e.g., Flask debug endpoints).
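One way to organise this chaining is a lookup from framework hints to paths worth probing. The mapping below is a small, illustrative assumption: /console is where the Werkzeug debugger console is served when Flask debug mode is enabled, and /docs, /redoc, and /openapi.json are FastAPI's default documentation routes. The candidate_paths helper is hypothetical.

```python
# Illustrative mapping (an assumption); extend it with frameworks seen in the target's metadata.
FRAMEWORK_PATHS = {
    "flask": ["/console"],       # Werkzeug debugger console, present only in debug mode
    "werkzeug": ["/console"],
    "fastapi": ["/docs", "/redoc", "/openapi.json"],  # default documentation routes
}


def candidate_paths(metadata_text: str) -> list:
    """Suggest paths to probe based on framework names found in metadata."""
    text = metadata_text.lower()
    paths = []
    for framework, framework_paths in FRAMEWORK_PATHS.items():
        if framework in text:
            for path in framework_paths:
                if path not in paths:  # keep order, drop duplicates
                    paths.append(path)
    return paths


# Hypothetical metadata strings, invented for this example.
flask_paths = candidate_paths("Server: Werkzeug/2.0.1; served with Flask")
fastapi_paths = candidate_paths("Built with FastAPI 0.95")
print(flask_paths, fastapi_paths)
```

The output is only a list of candidates; each path still has to be requested, within the agreed scope, to confirm whether it is exposed.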

Realistic Scenario

A penetration test revealed a /v1/logs endpoint on an AI platform that inadvertently exposed training logs, including dataset metadata. By correlating this with model card analysis, the team identified potential data leakage risks, leading to stricter endpoint access controls.

FAQ:

What is Offensive AI Recon?

It’s the process of gathering intelligence on AI systems to identify vulnerabilities in metadata, model cards, and APIs.

How do I start with metadata analysis?

Fetch metadata files (e.g., config.json) from model repositories using curl or Python.

Why are model cards useful for recon?

They reveal a model’s limitations, biases, and deployment details, guiding targeted attacks.

What tools are best for API enumeration?

Use curl, Burp Suite, gobuster, or Python’s requests library for endpoint discovery and testing.

Is Offensive AI Recon legal?

Yes, if conducted with permission as part of ethical hacking or penetration testing.

How can organizations prevent recon attacks?

Secure metadata, limit model card disclosures, and enforce strong API authentication and rate limiting.

How does recon evolve with experience?

Beginners focus on simple data collection, while experts chain findings for sophisticated attacks.

Conclusion:

Offensive AI Recon empowers security professionals to secure AI systems by uncovering vulnerabilities in metadata, model cards, and APIs. By progressing from exploring foundational techniques to building systematic skills and mastering advanced strategies, you can identify and mitigate risks effectively. Key takeaways:

- Start with tools like curl and browser DevTools to explore metadata and APIs.

- Build skills with Python automation and targeted model card testing.

- Master techniques by chaining findings for complex attacks, like model inversion or API exploitation.

- Always conduct recon ethically with proper authorization.

With AI systems increasingly targeted, mastering Offensive AI Recon ensures you can protect critical technologies from emerging threats.